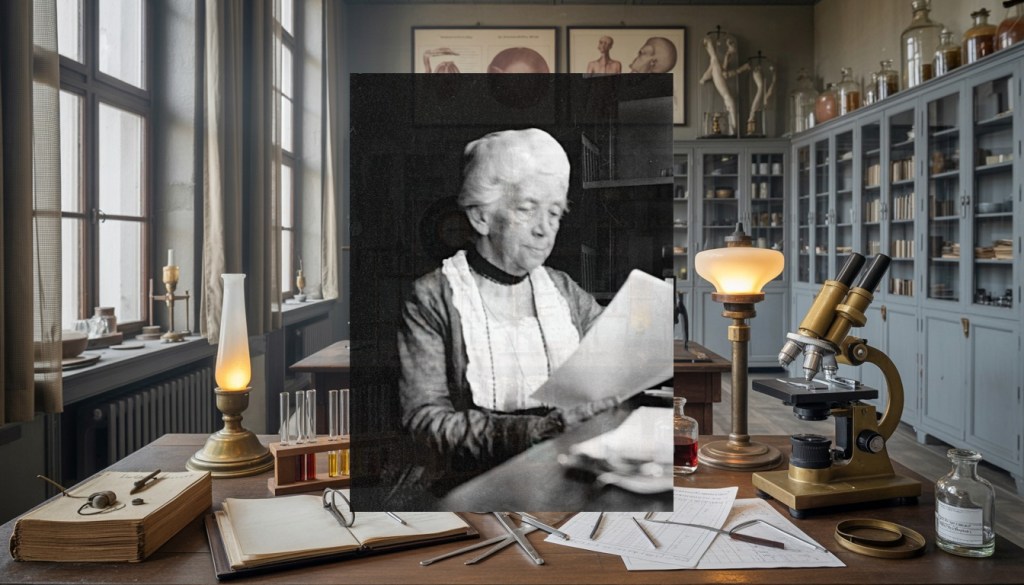

Christine Ladd-Franklin (1847-1930) was an American mathematician, logician, and psychologist whose interdisciplinary genius reshaped how we understand logical reasoning and human colour perception. Refusing to be confined by gender barriers or disciplinary boundaries, she developed the antilogism – a revolutionary logical method that reduced Aristotle’s fifteen valid syllogistic forms to a single, elegant principle – whilst simultaneously advancing colour vision theory through an evolutionary framework that modern neuroscience would vindicate decades later. Her story is one of extraordinary intellectual achievement paired with institutional cruelty: she completed all requirements for a PhD in 1882, yet Johns Hopkins refused to grant it because she was female, finally awarding the degree in 1926 when she was seventy-eight years old and too ill to attend the ceremony in person.

Today, over a century after her most transformative work, Ladd-Franklin remains oddly peripheral to the histories that should centre her. The antilogism is taught in logic courses worldwide, yet students learn it divorced from her name. Neuroscience confirms her colour vision synthesis as brilliantly prescient, yet textbooks credit everyone but her. Her refusal to accept an honorary degree – demanding instead the earned credential stolen from her – stands as a quiet act of defiance against a system designed to erase her. In an era when women remain underrepresented in mathematics, logic, and neuroscience, and when credential gatekeeping continues to determine whose contributions count, Ladd-Franklin’s life illuminates both the possibility of intellectual breakthrough and the machinery of institutional indifference.

It’s an honour to speak with you, Dr Ladd-Franklin. I must say that honour comes with particular weight today – your earned doctorate, delayed by forty-four years, represents one of the most peculiar forms of institutional cruelty on record. When Johns Hopkins finally offered you that honorary degree in 1926, you were, by all accounts, unwell. Yet you refused it and demanded what was rightfully yours. I wonder what moved you to take that stand at such cost to yourself.

The refusal was not difficult, I assure you, though perhaps my frailty made the gesture more pointed than I intended. An honorary degree is a consolation offered by those who wish to absolve their guilt without admitting culpability. It says, “You have achieved enough that we are now willing to pretend our original decision was somehow immaterial.” But it was not immaterial. It was the entire architecture of my life – every door closed because I lacked the credential they denied me.

When I completed my dissertation in 1882, I had satisfied every requirement imposed upon my male colleagues. I attended lectures, conducted research, developed novel theory, and published work of sufficient originality that Charles Sanders Peirce included it in his Studies in Logic. The only variable that differed was my sex. So when they offered the honorary degree forty-four years later, I could not accept it. To do so would be to ratify their premise that I had somehow been deficient, that I required a compensatory gesture rather than the restoration of what had been systematically withheld. I demanded the earned degree. It was a small victory, but it was real.

And did you receive it?

Eventually. After considerable correspondence and, I suspect, some discomfort on their part. The university capitulated. I was awarded my PhD in absentia – I was too unwell to travel for the ceremony – but the degree is real, earned, dated correctly to 1882. Four years later, I died. The timing was not fortuitous, but it was satisfying.

Before we discuss the larger architecture of those exclusions, I’d like to understand how you arrived at your most celebrated intellectual contribution: the antilogism. Your dissertation, published in 1883, was a radical reconceptualisation of how we test logical arguments. Can you walk me through your thinking – how you came to see that all fifteen valid syllogistic forms could be collapsed into a single principle?

The question that haunted me during my studies at Johns Hopkins was deceptively simple: why are there fifteen valid forms of the syllogism? The medieval logicians had catalogued them, named them – Barbara, Celarent, Darii, Ferio, and so forth – but the enumeration felt arbitrary. There was no unified principle governing their validity. Each form seemed to require its own demonstration.

I was reading Aristotle closely, and I noticed something peculiar about how he demonstrated validity. He used what he called “reduction” – taking an apparently valid form and converting it into a simpler, more obviously valid form. But the reduction was asymmetrical. The premises were treated as given, the conclusion as the element to be tested. And I thought: what if we inverted that relationship entirely?

Here is the insight: a valid syllogism, properly understood, is one in which the conclusion cannot be false whilst the premises are true. The connection between them is one of logical necessity. But what if we took the contradictory of the conclusion – that is, what is logically opposite to what we are trying to prove – and combined it with the two premises? Then we would have three propositions. And here is the beautiful part: if the original syllogism is valid, these three propositions will be jointly inconsistent. They cannot all be true. They form what I termed an “inconsistent triad” or, more formally, an “antilogism.”

This transforms the test for validity into something elegantly symmetrical. Instead of asking “Does the conclusion follow from these premises?” we ask “Can these three propositions – the two premises and the contradictory of the conclusion – all be true simultaneously?” The answer is always no for a valid syllogism. No asymmetry. No privileging of premises over conclusion. Simply: inconsistency.

When you say “antilogism,” what precisely are the mechanical steps a logician would follow to test, say, Barbara – the classic All M is P; All S is M; therefore All S is P?

Excellent. Let us use Barbara concretely.

We have:

- Premise 1: All M is P (All humans are mortal)

- Premise 2: All S is M (All Greeks are human)

- Conclusion: All S is P (All Greeks are mortal)

Now, take the contradictory of the conclusion. The contradictory of “All S is P” is “Some S is not P” (Some Greeks are not mortal). We now have three propositions:

- All M is P

- All S is M

- Some S is not P

The antilogism test asks: can these three all be true? Can everything human be mortal, everything Greek be human, and yet something Greek fail to be mortal? No. This is impossible. The three propositions are jointly inconsistent: any two of them may hold together, but never all three. They form an inconsistent triad. Therefore, the original syllogism is valid.

Now test an invalid form – say, the fallacy of the undistributed middle, “All P is M; All S is M; therefore All S is P” (All plants are mortal; All stones are mortal; therefore all stones are plants). Take the contradictory of the conclusion:

- All P is M

- All S is M

- Some S is not P (Some stones are not plants)

Can these three all be true? Yes, absolutely. Plants could be mortal, stones could be mortal, and yet stones could still not be plants. There is no inconsistency. Therefore, the original syllogism is invalid.

The elegance lies in the symmetry. Whether the argument is valid or invalid, the test is identical. You form the triad – the two premises and the contradictory of the conclusion – and ask whether inconsistency obtains. Reduced to its essence, all syllogistic validity – all fifteen forms – hinges on this single principle: the test of inconsistency.
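The test described above can be sketched as a brute-force search. This is a minimal illustration, not Ladd-Franklin's own notation: it assumes the Boolean reading of the four categorical forms (so "All" statements carry no existential import), and the names `holds`, `jointly_satisfiable`, and `is_valid` are hypothetical helpers introduced here. Three terms carve the universe into eight Venn regions; a model simply marks each region as empty or inhabited.

```python
from itertools import product

# The eight Venn regions for three terms. Each region is a triple of
# booleans: is it inside S, inside M, inside P?
REGIONS = list(product([False, True], repeat=3))
TERM = {"S": 0, "M": 1, "P": 2}

# Contradictory pairs on the square of opposition.
CONTRADICTORY = {"A": "O", "O": "A", "E": "I", "I": "E"}


def holds(prop, inhabited):
    """Evaluate a proposition (form, subject, predicate) in one model.

    `inhabited` lists the Venn regions that contain at least one thing.
    Assumption: Boolean reading, so A and E are true of empty classes.
    """
    form, subj, pred = prop
    s, p = TERM[subj], TERM[pred]
    if form == "A":  # All subj is pred
        return not any(r[s] and not r[p] for r in inhabited)
    if form == "E":  # No subj is pred
        return not any(r[s] and r[p] for r in inhabited)
    if form == "I":  # Some subj is pred
        return any(r[s] and r[p] for r in inhabited)
    if form == "O":  # Some subj is not pred
        return any(r[s] and not r[p] for r in inhabited)
    raise ValueError(f"unknown form: {form}")


def jointly_satisfiable(props):
    """True if at least one of the 256 models makes every proposition true."""
    for flags in product([False, True], repeat=len(REGIONS)):
        inhabited = [r for r, on in zip(REGIONS, flags) if on]
        if all(holds(prop, inhabited) for prop in props):
            return True
    return False


def is_valid(premise1, premise2, conclusion):
    """Antilogism test: valid exactly when the triad is inconsistent."""
    form, subj, pred = conclusion
    triad = [premise1, premise2, (CONTRADICTORY[form], subj, pred)]
    return not jointly_satisfiable(triad)


# Barbara: All M is P; All S is M; therefore All S is P.
barbara = is_valid(("A", "M", "P"), ("A", "S", "M"), ("A", "S", "P"))
# The invalid form from the text: All P is M; All S is M; therefore All S is P.
invalid_form = is_valid(("A", "P", "M"), ("A", "S", "M"), ("A", "S", "P"))
print(barbara, invalid_form)  # True False
```

The same `is_valid` call handles every mood and figure, which is the symmetry the passage emphasises: one question about a triad, regardless of which form is under test.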

Arthur Prior, the logician, called it the solving of “a problem first raised by Aristotle which had baffled logicians for two thousand years.” I do not claim credit for inventing the principle of non-contradiction – that is Aristotle’s great discovery. But recognising that all syllogistic validity reduces to the recognition of inconsistency, and that this reduction could be made mechanically, symmetrically, and therefore teachable in a single, unified form? That was mine.

And yet, when I look at introductory logic textbooks today – nearly 140 years after your publication – many simply do not mention you or the antilogism by name. The technique is there, but divorced from its creator. How do you account for this erasure, particularly given that contemporary logicians like Prior explicitly praised your work?

This is where we must speak plainly about the machinery of intellectual theft – or, to be more generous, the machinery of forgetting that operates when the creator’s authority is already questioned by virtue of sex.

When I published the antilogism in 1883, it was noticed. Prior and others recognised its value. But I was simultaneously excluded from the institutions where ideas are institutionalised – where they are transmitted to successive generations through formal instruction, where they are embedded in textbooks, where they are credited in bibliographies. I held no permanent academic position. I could not establish a research programme or train students. I could not hold the kind of intellectual authority that ensures one’s name remains attached to one’s discoveries.

Bertrand Russell knew my work and engaged with it, though not always charitably. But Russell, a man, could publish in prestigious venues, establish himself at Cambridge, and ensure his ideas – and his name – were woven into the canonical history of logic. When Whitehead and Russell’s Principia Mathematica established predicate logic as the dominant paradigm in the early twentieth century, older logical frameworks – including the Boolean tradition within which I worked – began to be treated as quaint predecessors.

The antilogism belongs to an older language of logic, using class relations rather than the quantifiers and predicates of the newer logic. When the discipline shifted, my work was not translated into the new language; it was simply left behind. And because I had no institutional power to resist that abandonment, my name followed my work into obscurity.

But I wonder if there is something more insidious. A man’s work survives institutional shifts because the man himself is presumed to have intellectual authority. His ideas are deemed foundational even if the formalism changes. But a woman’s work? It is more easily permitted to become a relic, a curiosity, something overtaken by history. There is no presumption of enduring significance to protect it.

I have made peace with this. Or, more truthfully, I have not made peace with it, but I have accepted it as the cost of refusing to be silent.

Let us turn to your colour vision work, which took you to Germany in 1891 to study under Helmholtz and Müller. This was a remarkable pivot – from pure logic to experimental psychology and neuroscience. How did that transition come about, and what problem were you trying to solve?

My work in logic was complete by 1883. I had said what I needed to say about the structure of validity. But I had always been drawn to questions that logic alone could not answer – questions about how the mind works, not merely how arguments should be structured. I spent years reading in psychology, in physiology, in evolutionary theory. Darwin was, for me, a constant companion. His framework for understanding how complex systems emerge through processes of adaptation and survival seemed applicable everywhere.

In the early 1890s, I became aware of a genuine crisis in colour vision research. There were two competing theories, both with empirical support, and neither could fully account for the evidence. Young and Helmholtz had proposed that the eye contains three types of colour receptors – red, green, and blue – and that all colour perception arises from the stimulation of these three cone types in different proportions. This explained how three receptors could generate the full spectrum of colour experience we perceive. A beautiful theory.

But Hering came along with a different framework. He noticed that colour perception involves opposition – we perceive red-green contrasts, blue-yellow contrasts, and light-dark contrasts. Moreover, people cannot perceive a colour that is simultaneously red and green, or simultaneously blue and yellow. Hering argued that the visual system must encode colour through opponent processes – cells that respond to one colour by inhibiting response to its opposite. This, too, was elegant and well-supported by evidence.

The problem was that these theories seemed to contradict one another. Helmholtz’s trichromacy and Hering’s opponent-process theory appeared mutually exclusive. One or the other must be correct – or so the field believed.

I became convinced that both were correct, but that they operated at different levels of the visual system. That is what drew me to Germany and to the laboratories there. I needed to understand the physiology in detail.

And what was your proposed synthesis?

I proposed that colour vision evolved in three stages, each stage building upon the previous one, each stage visible in the present structure of the retina if one looks carefully.

The most primitive stage – found still in the peripheral retina and in simpler organisms – is pure achromatic vision: light and dark, luminance without colour. The rods of our eye, which mediate this primitive form of vision, are the evolutionary foundation.

The second stage is blue-yellow sensitivity. At some point in evolutionary history, one class of photoreceptor diverged to become sensitive to shorter wavelengths (blue) and another to longer wavelengths (yellow), whilst the original achromatic system remained. This is an intermediate stage visible in the colour-matching behaviours of certain animals and in our own ability to perceive blue-yellow distinctions with relatively good consistency across observers.

The third and most recent stage is red-green sensitivity. The cone system that mediates this discrimination is the most recent evolutionary development. This is why red-green colour blindness is so prevalent – affecting approximately eight per cent of males – whereas blue-yellow colour blindness is far rarer, and complete colour blindness rarest of all. The most recent adaptation is the most vulnerable to disruption.

Now, here is the crucial insight: this three-stage evolutionary model resolves the theoretical conflict. At the level of the photoreceptors – the cones themselves – Young and Helmholtz are correct: we have three types of cones, each sensitive to different wavelengths. But at the level of neural processing – how the brain interprets the signals from these three cone types – Hering is also correct: the system uses opponent processes. Red versus green, blue versus yellow. The apparently contradictory theories are actually describing different levels of the visual system’s organisation.

The trichromatic receptors feed into opponent-process neural channels. The receptors give us the raw data; the neural circuits of the brain interpret that data through a logic of opposition. Both mechanisms operate simultaneously, neither negating the other.
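The two-level arrangement just described can be sketched in a few lines. This is an illustration only: the function name `opponent_channels`, the labels for the three cone signals (the modern long, medium, and short wavelength convention), and the unit weights are all assumptions made for the example, not measured physiology.

```python
def opponent_channels(l_cone, m_cone, s_cone):
    """Recombine three cone signals into opponent-style channels.

    Assumption: simple unit weights, chosen for readability rather than
    taken from any physiological measurement.
    """
    # Positive values lean red, negative values lean green.
    red_green = l_cone - m_cone
    # Positive values lean blue, negative values lean yellow.
    blue_yellow = s_cone - (l_cone + m_cone) / 2
    # Light-dark channel, carrying brightness without hue.
    luminance = (l_cone + m_cone) / 2
    return red_green, blue_yellow, luminance


# A stimulus that drives the long-wavelength cone hardest.
print(opponent_channels(1.0, 0.5, 0.25))  # (0.5, -0.5, 0.75)
```

Because each opponent channel is a single signed quantity, it cannot signal red and green at once, or blue and yellow at once, which is one way to picture why those pairs are never perceived together even though three receptor types sit underneath.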

This is genuinely prescient. Modern neuroscience – cellular recording from the 1960s onward – has confirmed precisely this architecture: three cone types in the retina feeding into red-green and blue-yellow opponent channels in the lateral geniculate nucleus and primary visual cortex. Your theory preceded the experimental confirmation by decades. Did you anticipate that the opponent processing might be occurring in the brain rather than in the eye itself?

I suspected it. The mathematics suggested it. If the opponent processing were happening in the retina itself – if the three cones were already being recombined into opponent signals – then we would expect certain asymmetries in colour perception that we do not observe. The system’s behaviour suggested that the raw trichromatic signals were being transmitted to the brain, where they were then reorganised into opponent channels. The retina appeared to be doing one thing; the brain another.

But I must be honest: this was inference rather than observation. I had no access to the neurophysiological recording equipment that would later confirm it. I was reasoning from behaviour, from colour-matching experiments, from comparative anatomy. I was correct, but I was correct provisionally, pending technology that did not yet exist.

This is perhaps the particular curse and gift of theoretical work: you can perceive the structure of a problem before you can prove it. You become confident in conclusions that remain speculative. And then you must spend years waiting for empirical methods to catch up to your thinking.

Did that waiting prove difficult?

It was maddening. I published my synthesis in Colour and Colour Theories in 1929, near the end of my life. By then, I had spent decades seeing my theoretical framework confirmed by piecemeal experiments – one discovering one aspect, another confirming another. But I never had the luxury of announcing a complete vindication. The scientific establishment moved at its own pace. Women’s theoretical work was treated with suspicion; it required enormous empirical weight to overcome that suspicion.

I should add: I was not always correct. My insistence on the evolutionary hierarchy – that red-green sensitivity was the most recent adaptation – was based on reasoning about the prevalence of red-green colour blindness. But I could not trace the actual genetic or developmental mechanisms. I was constructing a narrative that fit the available data. Subsequent research has shown that the evolutionary history is more complex than I posited. The three cone types did not emerge in the neat temporal sequence I described. My framework was productive and generative, but it was also, in some respects, a scaffold that newer research would need to rebuild.

I am comfortable with that. Science is iterative. My contribution was recognising the conceptual possibility of reconciliation between competing frameworks. Others did the detailed work of proving it.

Let me ask about your experience at Johns Hopkins itself. You arrived in 1878 having signed your fellowship application “C. Ladd” – deliberately concealing your gender. When the university discovered you were female, they attempted to revoke the offer. But James Joseph Sylvester, the mathematician, insisted you be admitted. What was that experience like?

It was humiliating and exhilarating in equal measure – a contradictory state I would become familiar with throughout my life.

I signed the application “C. Ladd” for a single, practical reason: I knew that an application signed “Christine Ladd” would be rejected unread. There was no conspiracy in this; I was being realistic. I had read enough institutional histories to understand that universities did not admit women to graduate study, particularly not in mathematics. The convention was that women were intellectually suited for teaching at the primary level, perhaps at finishing schools, but not for original research or advanced theoretical work.

My father had died two years prior. My circumstances were constrained. I needed education. The most direct path was to present myself in a form that would be considered. Once admitted, I reasoned, the work itself would speak.

When they discovered I was a woman, the university’s embarrassment was immediate and visceral. How could they have made such an error? The trustees moved to revoke the fellowship. I remember the acute shame of that moment – not shame at having misrepresented myself, but shame that the university was so distressed at its own mistake.

Sylvester intervened. He was an extraordinary man – Jewish, himself subject to discrimination, someone who understood that genius and character were not distributed according to the categories the academy prescribed. He insisted that the offer stand. He said, in effect: if the work is sound, then the person producing the work belongs here. The university capitulated, though with conditions. My name was omitted from official circulars. I was not permitted to use the title “fellow,” though I held the fellowship. I attended lectures but was not formally acknowledged as a student. I was a presence that was simultaneously real and officially erased.

That institutional dishonesty must have been extraordinary – to occupy a space whilst being denied recognition within it.

It was clarifying. It taught me that institutional credibility is a fiction – a useful fiction, perhaps, but a fiction nonetheless. The university could grant me access whilst denying me recognition, because the recognition was what conferred legitimacy. But the access was real. I learned, I worked, I generated ideas. What the university chose to acknowledge or deny did not alter the fact of my intellectual activity.

I became adept at existing in that liminal space. I completed my dissertation. I was published in respectable journals. And I was simultaneously, officially, invisible. It was a strange existence – the work was visible, but the worker was not.

One learns to tolerate contradictions.

And yet when you sought an academic position after completing your PhD requirements, you were denied. The university did not offer you a faculty appointment until 1904 – more than twenty years later. What happened in those intervening decades?

Ah, but here we must speak about marriage, and the particular cruelty of how institutions wielded anti-nepotism doctrine to exclude women.

In 1882, I married Fabian Franklin, a mathematician of considerable talent. It was a genuine partnership – we read each other’s work, we discussed problems together, we were intellectual companions. But his appointment at Johns Hopkins created an immediate barrier to my own employment. Anti-nepotism rules, you understand, were designed – or so they claimed – to prevent the corruption of academic merit by familial favouritism. Fair enough, perhaps. But these rules were enforced asymmetrically.

When a man married a woman, the man’s career proceeded unimpeded. The wife either did not work, or she worked in some subordinate capacity – as an assistant, a secretary, a volunteer. The assumption was that she would follow his trajectory, support his career, and her own intellectual work would be secondary. Indeed, it was expected to be secondary.

But when a woman was already established – or attempting to become established – in academic work, and she married a man in the same institution, the rules suddenly became immovable. I was barred from teaching at Johns Hopkins because my husband worked there. Fabian could have left, and then I might have been permitted to stay, but that would have damaged his career for the sake of mine. Such sacrifices were not expected of men; they were not even considered possible.

So I taught elsewhere. Columbia offered me a position – unpaid, of course. Chicago did as well. At some institutions, I was permitted to give occasional lectures. But these were precarious arrangements, year to year, always on the margin. The financial strain was genuine. We had some means because of Fabian’s salary, but the professional precariousness was real. I could not establish a research programme. I could not supervise doctoral students. I could not build an intellectual legacy in the way male colleagues could.

Then, when I was fifty-six years old, Johns Hopkins finally permitted me to teach one class per year, and it was unpaid. One class. That was the recognition they granted me.

What stands out is that the anti-nepotism rule was weaponised against you despite the fact that you and Fabian were genuine intellectual partners. But the rule as applied assumed that women would be the subordinate partner by default.

Precisely. The rule was designed to be gender-neutral in its language. In its application, it was devastatingly gendered. Had I been male and married a woman scholar, nothing in my career would have been disrupted. I would have taught, published, advanced. She would have been expected to accommodate my career. Instead, my career was sacrificed to the appearance of institutional integrity.

The cruelty was that it was not malicious, precisely. It was worse than that: it was bureaucratic. No individual chose to harm me. The rules simply operated, and I was the collision point between well-intentioned principles and their implementation by a patriarchal institution. I could not argue with any single person; I was arguing against a machine.

And yet you persisted. You continued to publish, to work, to contribute ideas. How did you maintain the motivation to do so when the institutions were so clearly designed to prevent your advancement?

Stubbornness, I think. And rage, though I learned not to display it openly. And the simple fact that the work itself interested me more than the lack of recognition crushed me.

I wanted to understand how the mind perceives colour. I wanted to understand how logical argument works. These questions did not cease to be interesting because a university refused to acknowledge my contributions. If anything, the refusal made them more important. I was not doing the work to gain a title or a salary – I had already been denied those. I was doing the work because the work itself was real and necessary.

But I should not sentimentalise this. The lack of institutional support did have consequences. I could not train students as I wished. I could not establish the kind of intellectual continuity that ensures a field remembers you. I published in journals; I was read by contemporaries; and then, as the next generation of scholars took over, many of them did not cite my work. They may not have even known it was mine. My name was omitted from standard histories.

The effort to maintain productivity despite the institutional barriers was not noble. It was survival. And it was also, I will admit, somewhat costly. There were times I was exhausted – not from the work itself, but from the constant need to assert my right to do the work.

I want to return to your colour vision work, because there’s a particular detail I’m curious about. You tested your theory experimentally in German laboratories. What did those experiments look like? What was your method?

The work centred on what we call colour-matching experiments. These are deceptively simple in conception but quite demanding in execution.

A subject sits in a darkened room before a viewing apparatus. On one side, they see a sample colour – say, a particular shade of orange. On the other side, they have three adjustable coloured lights: red, green, and blue. The task is to adjust the intensity of those three lights until the mixture appears identical to the sample colour. By recording the intensities required, we can create a mathematical description of that colour in three-dimensional space.
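The "mathematical description in three-dimensional space" can be sketched as a small linear problem. This is a hedged illustration, not a reconstruction of the laboratory procedure: it assumes that light mixtures add linearly, and the response triples below are made-up numbers chosen only so the arithmetic is easy to follow. The names `det3` and `match_intensities` are hypothetical helpers.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def match_intensities(primaries, sample):
    """Find the three intensities whose mixture equals the sample.

    primaries[k] is the response triple evoked by light k at unit
    intensity; sample is the response triple of the target colour.
    Solved with Cramer's rule, assuming additive mixing.
    """
    # Column k of the system matrix is the response triple of light k.
    matrix = [[primaries[k][row] for k in range(3)] for row in range(3)]
    d = det3(matrix)
    if d == 0:
        raise ValueError("the three lights are not independent")
    result = []
    for k in range(3):
        replaced = [row[:] for row in matrix]
        for row in range(3):
            replaced[row][k] = sample[row]
        result.append(Fraction(det3(replaced)) / Fraction(d))
    return tuple(result)


# Hypothetical response triples for the red, green, and blue lights.
PRIMARIES = [(2, 1, 0), (1, 2, 0), (0, 0, 1)]
# A sample built as 1 part red, 2 parts green, 3 parts blue.
SAMPLE = (4, 5, 3)
intensities = match_intensities(PRIMARIES, SAMPLE)
print(intensities)
```

The recovered triple of intensities is the colour's coordinate: any sample the observer can match is located by three numbers, whatever its physical spectrum.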

Now, here is where the evolutionary framework comes in. If you test the colour-matching ability across the entire retina – not just the central fovea where vision is sharpest, but also the peripheral regions – you find systematic differences. In the peripheral retina, the ability to distinguish red from green diminishes dramatically. Blue-yellow discrimination remains relatively intact. And at the very periphery, one enters a zone where the visual system is essentially colour-blind – everything appears in shades of grey.

This matches the anatomical structure perfectly. The fovea, the small central pit of the retina where visual acuity is greatest, is packed densely with cones. As you move outward, the density of cones decreases, and the density of rods – the achromatic receptors – increases. The peripheral regions are dominated by rods.

So the question becomes: why is this anatomical arrangement so prevalent? Why do so many species have colour vision concentrated in the fovea and achromatic vision in the periphery?

My hypothesis was evolutionary: red-green sensitivity is the most recent adaptation. It emerged last, which means it had not yet diffused throughout the retina. Blue-yellow sensitivity was intermediate – more recent than achromatic vision, older than red-green. Achromatic vision is the oldest, which is why it dominates the peripheral vision and why all animals, even those without colour vision, maintain some form of brightness discrimination.

The testing was laborious. We tested dozens of subjects, mapping their colour-matching abilities across different retinal locations. We used standard colour samples. We controlled for lighting conditions, pupil size, fatigue. The variability was substantial, but the pattern held. The peripheral retina is indeed progressively more colour-blind as one moves outward, in precisely the sequence I predicted.

And this was novel at the time?

Very much so. Previous research had noted that peripheral colour vision was poor, but the explanation had been either mechanical – “the cones are more densely packed in the fovea” – or functional, but without evolutionary grounding. I was proposing that the anatomy itself was a record of evolutionary history. The structure of the eye was not an accident; it was a chronicle of how the organism had adapted to its visual world over millions of years.

This required bringing together anatomy, physiology, psychology, and evolutionary theory. That sort of synthesis was not common then, particularly from a woman. Men worked in disciplines; women were permitted to dabble in philosophy but not to create genuine theoretical frameworks that bridged fields.

I have to ask: when you were excluded from Edward Titchener’s Society of Experimentalists, how did that affect your work? Titchener claimed that women’s presence would interfere with the informal atmosphere. It was the most explicitly exclusionary justification.

That was a profound wound. I was a pioneering experimental psychologist – I had developed novel methods for testing colour perception, I had conducted rigorous experiments in two countries, I had published on psychophysical measurement. And Titchener, who was establishing himself as the authoritative figure in American experimental psychology, created a society specifically designed to exclude people like me whilst claiming it was about “atmosphere” and “informality.”

It was not about atmosphere. It was about power. The Society of Experimentalists was where ideas were shared, where collaborations were forged, where the next generation of psychologists was recruited. By excluding women, Titchener was ensuring that women remained peripheral to the discipline that was claiming to be the most objective, the most scientific, the most rigorous. The exclusion was masked in language about comfort and informal socialising, but it was naked gatekeeping.

I protested loudly. I do not believe in accepting such things quietly. But my protest changed nothing. The society remained all-male. Men attended their smoke-filled meetings and formed their collaborations and made their plans for the future of psychology, and women remained on the outside, doing excellent work that was invisible to the networks of influence where decisions about what counted as important research were made.

Did you find allies among other women scientists or psychologists?

Some. There were women doing fascinating work in psychology, in particular. But we were scattered, not institutionally supported, often working in isolation or in academic backwaters. We corresponded, we cited each other’s work, we tried to support one another. But we did not have the structural advantages men possessed. No matter how brilliant any individual woman was, she could not replace the systemic advantage of belonging to an established network.

I was more fortunate than many because I had Fabian as an intellectual partner, even if his presence at Johns Hopkins prevented my own employment there. He read my work, he discussed ideas with me, he helped me think through problems. Not every woman had such support. Many were completely isolated.

Let me raise a critique of your colour vision theory that emerged later. Some researchers questioned whether the evolutionary sequence you proposed – achromatic to blue-yellow to red-green – was accurate. Recent genetic studies suggest the evolutionary history was more complex. Do you have any thoughts on those challenges?

I have been thinking about this, now that I have access to research I did not possess in life. I was constructing a narrative that fit the behavioural and anatomical evidence available to me. I proposed a linear, sequential evolution because that was the parsimonious explanation for the apparent hierarchy of colour vision abilities in the human retina. But I was reasoning with incomplete information.

The genetic evidence suggests that the evolution of colour receptors involved more duplication, divergence, and modification than I imagined. The red and green cones are particularly similar genetically – they appear to have diverged relatively recently, through duplication of a single ancestral long-wavelength receptor gene. The blue cone has a different and much older evolutionary history. My neat three-stage sequence was, I think, a productive simplification rather than a precise account of evolutionary mechanisms.

This is a genuine limitation of my work that I can now acknowledge. I was doing the best theorising possible with the tools available. But I was not doing evolutionary genetics, because that field did not yet exist. I was doing evolutionary inference based on comparative anatomy and behavioural evidence. Those are different enterprises.

The important insight that has held up is the principle of integration: that trichromatic receptors and opponent-process neural coding are not contradictory mechanisms but rather complementary levels of a unified system. That insight remains valid even if the specific evolutionary narrative requires revision. But I should have been more cautious about claiming certainty regarding the evolutionary sequence.

That’s a generous and intellectually honest admission. I wonder if you have thoughts on why your work in colour vision has been so thoroughly excluded from the history of neuroscience, even though the basic framework – three cone types feeding into opponent neural channels – has been so thoroughly validated.

This is where institutional erasure becomes particularly visible. My theory integrated two competing frameworks that were both later shown to be correct. One would think that would enshrine my contribution in every neuroscience textbook. Instead, Helmholtz and Hering each received credit for their portions of the synthesis, whilst the integrative act itself – the realisation that the two frameworks operated at different levels of the system – was treated as obvious, as something that “emerged naturally” from the convergence of later research.

A man who performed that synthesis would have been credited with revolutionary insight. A woman who performed it was treated as if she had simply observed what was already obvious. The synthesis itself was made retroactively invisible.

I suspect this happened because I could not establish a research lineage. I had no students working in my laboratory, no researchers publishing papers that built on my work, no institutional successor to carry my ideas forward. The ideas were absorbed into the broader scientific community, but without a named source. Helmholtz had students and colleagues who ensured his work remained credited. Hering had the same. I was working largely alone, teaching unpaid classes, unable to build an institutional presence.

That is perhaps the deepest injustice: not that my ideas were wrong – they were not. But that I had no structure to keep my name attached to those ideas. An idea orphaned from its creator becomes common property. It is absorbed into the general knowledge of the field, and the creator fades away.

There is a famous anecdote where you allegedly wrote to Bertrand Russell claiming to be a solipsist and expressing surprise that there were no others. Russell repeated this as evidence of your eccentricity. Did that encounter happen?

Russell repeated it endlessly, didn’t he? Like an amusing anecdote at dinner parties. The solipsist philosopher who wrote to confess her lonely philosophy!

Yes, I did write to Russell, but the letter was entirely satirical. I understood perfectly well what solipsism is – the philosophy that only one’s own mind exists – and the logical impossibility of a community of solipsists. My letter was a philosophical joke, a bit of wit directed at Russell and his intellectual pretensions. I was playing with paradox.

But my wit was read as confusion. Women’s intellectual humour is consistently misread by male colleagues as evidence of naïveté. A man writes something paradoxical and he is praised for his philosophical cleverness. A woman writes the same thing and she is dismissed as not understanding the subject matter. The same words; radically different interpretations depending on the sex of the author.

This is one of those small cruelties that accumulates over time. Russell’s anecdote became canonical – I was the eccentric woman professor, confused about basic philosophical concepts. But I was not confused. I was being deliberately clever, and my cleverness was weaponised against me as evidence of my unseriousness.

That’s infuriating. Did Russell ever acknowledge that he had misread the letter?

No. By the time such acknowledgment might have mattered, I was old, ill, and increasingly marginal to the philosophical establishment. Russell had moved on to other concerns. The anecdote persisted, detached from its author’s intent, taking on a life of its own.

This is one of the reasons I became so vocal in my later years about challenging attribution errors and plagiarism. When William Johnson began publishing work that resembled my antilogism without crediting me, I made noise. I wrote letters, I confronted him publicly. It was not enough to do good work; one had to actively insist on the recognition of that work, because the institutional machinery was designed to allow one’s contributions to be absorbed, anonymised, or reassigned to others.

I became exhausting, I’m sure. Women who fight for credit are always exhausting to the men around them. But I was not going to remain silent whilst my ideas were appropriated or allowed to drift into the common knowledge without attribution.

What advice would you offer to women entering science today – women who might face some of the barriers you encountered?

First: the barriers are real, and recognising their reality is not pessimism. It is clarity. Do not internalise the fiction that merit alone determines advancement. Merit is necessary, but it is not sufficient. Structure matters. Institutional support matters. Whose name gets attached to ideas matters enormously.

Second: document your work obsessively. Publish it. Get it into the public record in your name. Do not rely on oral transmission or on the good faith of colleagues. Write it down. Date it. Make it impossible to erase or misattribute.

Third: build alliances with other women. We are more powerful together than isolated. I wish I had done more of this. I did some, but I was often too focused on my individual work to build the kind of systematic support network that might have changed the trajectory of my field.

Fourth: do not accept second-class credentials or honorary gestures as substitutes for genuine recognition. It is tempting to accept what is offered and be grateful. Refuse. Demand what is rightfully yours. This is exhausting and often futile, but it refuses the premise that women should be satisfied with scraps.

Fifth: move between disciplines if one discipline closes to you. This was partly forced upon me, but it also became a strength. Do not allow gatekeeping in one field to prevent you from making contributions in others. My ability to work across mathematics, logic, psychology, and biology meant I could pursue important questions even when certain institutions excluded me.

And finally: do not wait for vindication. Do not assume that the correctness of your ideas will eventually lead to recognition. Correctness is necessary but not sufficient. You must actively advocate for the importance of your work, you must ensure it remains visible, you must resist the gradual fading that befalls women scholars. I spent too many years hoping that good work would eventually be acknowledged. It is more effective to demand acknowledgment actively.

Is there anything you wish had been different? Any decision or moment you would alter if you could?

I wish I had refused Johns Hopkins’ initial offer. Not because I regret studying there – I learned a great deal from Sylvester and others. But I wish I had had the power to refuse, to demand genuine admission with full recognition and title, rather than accepting the humiliating half-membership they offered. Perhaps if I had, it would have changed the trajectory of women’s admission to graduate programmes at American universities.

I also wish I had been more strategic about building institutional power earlier. I was focused on the work itself – the intellectual problems fascinated me. But I should have been simultaneously focused on building the structures that would have allowed that work to endure. I should have been more aggressive about establishing research programmes, training students, creating an intellectual legacy that would have survived my death.

I wish I had married a man in a different field, or had never married at all. That is an unfair wish – Fabian was a good person and a good intellectual partner – but the reality is that marriage destroyed my institutional trajectory in ways it never would have destroyed his. It is a particular cruelty of the era that intellectual partnership and professional advancement were often in direct conflict for women.

And I wish, most of all, that the work itself had been sufficient. That correctness had been enough. That I did not have to spend so much energy fighting for recognition of the ideas themselves. That I could have simply done the work and trusted that the scientific community would evaluate it fairly.

But I could not trust that. And so I fought. And I will not pretend the fighting was not exhausting. But it was also necessary.

Thank you for your honesty. Is there a final thought you’d like to offer?

Only this: I am heartened to know that women are now entering mathematics, logic, neuroscience, and psychology in greater numbers. The barriers remain – I am told they are stubborn, intractable things – but women are present now in ways I was not. That presence itself changes things. It becomes harder to dismiss women’s ideas as peripheral when there are women working visibly in the field.

But I would also offer a warning: the machinery of erasure is patient. It does not require malice; it requires only inattention. Ideas by women will continue to be absorbed into the common knowledge without attribution unless women insist on credit. Disciplines will continue to exclude women from networks unless their members actively resist the informal, smoke-filled gatekeeping.

The antilogism is still taught. Colour vision theory now centres on the principles I articulated. But my name is often absent from those conversations. That will not change unless the scientific community actively resists the default tendency toward forgetting. Attention must be paid.

Letters and emails

Following the interview, we invited our international community of mathematicians, neuroscientists, historians, philosophers, and science advocates to submit questions they wished to pose to Dr Ladd-Franklin. The responses were remarkable in their range and thoughtfulness – some probing the technical foundations of her work, others exploring the philosophical implications of her choices, and still others wondering about the roads not taken in her extraordinary life. We received correspondence from across continents, each letter reflecting a different facet of her legacy and the enduring questions her work raises for contemporary scholars.

Below are five letters – selected to represent diverse geographical perspectives, disciplinary interests, and the kinds of inquiries that continue to resonate nearly a century after her death. These questions invite Dr Ladd-Franklin to reflect on aspects of her intellectual journey that the initial interview touched upon only lightly: the epistemological foundations of her interdisciplinary work, the role that institutional exclusion played in shaping her intellectual trajectory, the technical decisions that guided her research, and what wisdom she might offer to those who follow in her path.

Rania Al-Sayed, 34, Computational Neuroscientist, Singapore

Your colour vision model proposed that neural opponent processing occurred downstream from the retinal cones – a claim that wasn’t confirmed until neurophysiological recordings became possible in the 1960s. I’m curious about your reasoning process: what specific behavioural or anatomical evidence made you confident enough to posit neural processing at a level you couldn’t directly observe? And looking at modern computational models of colour perception, do you see limitations in how we’ve built upon your framework that might benefit from returning to your original intuitions?

Dr Al-Sayed, your question goes to the very thing that made the colour vision work both exhilarating and precarious. You ask what evidence gave me confidence to posit neural opponent processing at a level I could not directly observe. The answer is that I had no choice but to reason from behaviour and anatomy toward mechanism – and that this indirect method, whilst demanding, has advantages modern researchers may have forgotten.

The behavioural evidence came from multiple sources, each pointing toward the same conclusion. First, there were the phenomena of colour contrast and afterimages that Hering had documented so carefully. When you stare at a red patch and then look away, you see a green afterimage. When you view a grey square surrounded by yellow, the grey appears bluish. These opponent relationships are extraordinarily robust – they appear in every observer, across cultures, across lighting conditions. They are not learned associations; they are structural features of perception itself.

But here is what troubled me: if these opponent processes were happening at the level of the photoreceptors themselves – if the cones were already organised into red-green and blue-yellow opposing pairs – then we should observe certain asymmetries in colour matching that we do not, in fact, observe. When subjects perform colour-matching experiments using mixtures of red, green, and blue lights, the mathematics of the matches are remarkably clean. They follow what we would call linear transformations. Three independent variables – the intensities of the three primaries – are sufficient to match any visible colour. This suggested to me that the photoreceptors were operating independently, sending three separate signals forward.

Yet the opponent phenomena persisted. So the question became: where does the transformation from trichromatic independence to opponent organisation occur? It had to be downstream. Somewhere between the retina and conscious perception, the visual system was recoding three independent signals into opponent pairs.
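The linearity argument above can be made concrete with a small sketch. It assumes a hypothetical 3×3 table of cone responses to three primaries (the numbers in `PRIMARIES` are invented for illustration, not measured sensitivities) and shows that three intensities suffice to reproduce any target triple of cone responses, and that the match scales linearly with the target.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def cone_response(primaries, intensities):
    """Cone responses (L, M, S) to a mixture of the three primaries.

    primaries[r][k] is the response of cone type r to unit intensity of
    primary k; responses are assumed to add linearly.
    """
    return [sum(primaries[r][k] * intensities[k] for k in range(3))
            for r in range(3)]


def match_intensities(primaries, target):
    """Solve for the primary intensities that reproduce `target` (Cramer's rule)."""
    d = det3(primaries)
    if abs(d) < 1e-12:
        raise ValueError("primaries are not independent")
    solution = []
    for k in range(3):
        # Replace column k with the target responses.
        replaced = [[target[r] if col == k else primaries[r][col]
                     for col in range(3)] for r in range(3)]
        solution.append(det3(replaced) / d)
    return solution


# Hypothetical cone responses (rows: L, M, S; columns: red, green, blue primary).
PRIMARIES = [
    [0.90, 0.40, 0.05],
    [0.30, 0.80, 0.10],
    [0.02, 0.10, 0.90],
]

target = [0.6, 0.5, 0.3]
mix = match_intensities(PRIMARIES, target)
```

A solved intensity can come out negative; in a real matching experiment that corresponds to adding the primary to the test light rather than to the mixture.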

The anatomical evidence supported this. The optic nerve – the bundle of fibres carrying signals from the eye to the brain – contains far fewer nerve fibres than there are photoreceptors. This suggested that substantial convergence and recombination of signals was occurring even before they reached the brain proper. Moreover, the retina itself is not merely a passive receptor array; it contains multiple layers of interconnected cells. Horizontal cells, bipolar cells, amacrine cells – these structures hinted at complex processing happening locally before signals even left the eye.

But the most compelling evidence was comparative. When one examines colour vision across species – and I spent considerable time reading the work of comparative anatomists – one finds that the distribution of cone types varies considerably. Some species have two cone types, some three, some four. Yet in every case where colour vision exists, one finds evidence of opponent processing in behaviour. The opponent organisation appears to be a universal principle of how nervous systems interpret chromatic information, regardless of how many receptor types feed into that interpretation.

This suggested to me that opponent processing was not a feature of the receptors themselves but rather a computational strategy employed by neural circuits. The receptors provide raw chromatic data; the brain reorganises that data into a form more useful for detecting chromatic boundaries, identifying objects, and making perceptual judgements. The transformation is neural, not photochemical.

Now, you ask whether I see limitations in how modern computational models have built upon this framework. I do. The models I have examined since my death tend to treat opponent processing as a fixed transformation – three cone signals are linearly recombined into three opponent channels (red-green, blue-yellow, and luminance), and that is the end of the story. But this is too rigid.
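The kind of fixed recombination described above can be sketched in a few lines. The channel weights in `OPPONENT_WEIGHTS` are hypothetical, chosen only so that equal cone signals produce no chromatic response; they are not taken from any published model.

```python
# Hypothetical weights: each channel is a fixed linear mix of (L, M, S).
OPPONENT_WEIGHTS = {
    "red_green": (1.0, -1.0, 0.0),     # L minus M
    "blue_yellow": (-0.5, -0.5, 1.0),  # S minus the mean of L and M
    "luminance": (0.5, 0.5, 0.0),      # mean of L and M
}


def to_opponent(cones, weights=OPPONENT_WEIGHTS):
    """Recode three cone signals into three channels with fixed weights."""
    l, m, s = cones
    return {name: wl * l + wm * m + ws * s
            for name, (wl, wm, ws) in weights.items()}


achromatic = to_opponent((1.0, 1.0, 1.0))  # equal cone signals
reddish = to_opponent((1.0, 0.2, 0.1))     # L cone dominates
```

Because the weights never change, the same cone triple always yields the same channel values, whatever the surrounding scene. That rigidity is the limitation at issue here.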

What I suspected – and what I believe remains underexplored – is that the opponent organisation is adaptive. The visual system does not simply apply a fixed transformation to cone signals. Rather, it adjusts the strength and balance of opponent processing depending on context: the ambient illumination, the chromatic statistics of the scene, the observer’s attentional state, even the evolutionary history of the observer’s species.

Consider colour constancy – the phenomenon that a red apple appears red whether viewed in sunlight, in shadow, or under artificial illumination, despite the fact that the wavelength composition of the light reaching your eye changes dramatically across these conditions. This constancy requires that the opponent channels be dynamically tuned to discount the illuminant. The transformation from cone signals to opponent signals cannot be fixed; it must be flexible, sensitive to context.
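One simple way to illustrate illuminant discounting is von Kries-style gain scaling, in which each cone signal is divided by that cone's response to the illuminant. This is a named technique swapped in for illustration, not a mechanism the interview attributes to anyone, and the sketch makes a strong simplifying assumption: surface reflectance and illuminant are each reduced to three hypothetical numbers, one per cone type.

```python
def cone_signals(reflectance, illuminant):
    """Cone signals from a surface under a light (per-cone multiplication)."""
    return tuple(r * e for r, e in zip(reflectance, illuminant))


def von_kries_adapt(cones, illuminant):
    """Divide each cone signal by the illuminant's effect on that cone."""
    return tuple(c / e for c, e in zip(cones, illuminant))


def red_green(cones):
    """A fixed red-green opponent signal: L minus M."""
    l, m, _ = cones
    return l - m


# Hypothetical values in (L, M, S) order.
APPLE = (0.8, 0.3, 0.1)
DAYLIGHT = (1.0, 1.0, 1.0)
TUNGSTEN = (1.4, 1.0, 0.5)  # more long-wavelength light, less short

raw_day = red_green(cone_signals(APPLE, DAYLIGHT))
raw_tungsten = red_green(cone_signals(APPLE, TUNGSTEN))

adapted_day = red_green(von_kries_adapt(cone_signals(APPLE, DAYLIGHT), DAYLIGHT))
adapted_tungsten = red_green(von_kries_adapt(cone_signals(APPLE, TUNGSTEN), TUNGSTEN))
```

Without adaptation the red-green signal shifts with the light source; with the gains rescaled per illuminant it stays put. Real constancy is only approximate, and the visual system must estimate the illuminant rather than being handed it as this sketch is.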

Modern models tend to underestimate this flexibility because they are built to match psychophysical data from carefully controlled laboratory experiments – neutral backgrounds, stable lighting, brief presentations. But the visual system evolved to operate in natural environments where chromatic context is constantly shifting. I believe the opponent processing machinery is far more sophisticated than current models capture – not merely a fixed recoding of cone signals, but an active inference process that adjusts its parameters to maintain perceptual stability across wildly varying conditions.

This is speculative, of course. But it is grounded in the observation that biological systems are rarely as rigid as the mathematical models we construct to describe them. The elegance of the model – three cones, three opponent channels, clean linear transformations – may be obscuring messier, more interesting truths about how the visual brain actually operates.

If I were conducting research today, I would focus on measuring opponent processing under varying contexts: different illuminants, different chromatic surrounds, different tasks. I suspect one would find that the “opponent channels” are not fixed entities but rather dynamic patterns of neural activity that reconfigure themselves moment to moment. That would be a return to my original intuition: that the visual system is not a passive transformer of signals but an active interpreter of chromatic information, shaped by evolutionary pressures to extract the most useful perceptual structure from ambiguous sensory data.

Joaquín Salvatierra, 41, Philosopher of Logic, Buenos Aires

The antilogism reduced fifteen syllogistic forms to a single principle of inconsistency. But I wonder whether you encountered resistance to this unification from colleagues who believed the diversity of syllogistic forms carried philosophical significance – that each form revealed something distinct about how reasoning works. How did you respond to arguments that your elegance might have obscured important nuances? And do you think there are domains where multiple, irreducible forms might actually be more truthful than a single unified principle?

Professor Salvatierra, you have identified precisely the philosophical tension that animated the most heated debates I had with my contemporaries – and indeed, with myself. Yes, I encountered considerable resistance to the antilogism, though not always articulated in the terms you suggest. The resistance took several forms, and each revealed something about what logicians believed they were doing when they reasoned.

The first objection came from those trained in the medieval tradition – scholars who had memorised Barbara, Celarent, Darii, Ferio as schoolboys and who regarded the catalogue of valid forms as part of the inherited architecture of thought itself. To these men, the fifteen forms were not arbitrary. They represented distinct modes of reasoning, each with its own character and application. Barbara, the universal affirmative syllogism, felt different to them than Baroco, which requires reasoning by contradiction. The reduction of all forms to a single principle of inconsistency seemed to these scholars like a loss – as though I were claiming that all Gothic cathedrals could be reduced to the same engineering principles and were therefore identical.

I sympathised with this objection more than I admitted at the time. There is something aesthetically and pedagogically valuable in recognising that arguments have different shapes. A student learning logic benefits from seeing that one can reason from universal premises to universal conclusions, or from particular premises through careful steps to particular conclusions, or by assuming the opposite and deriving a contradiction. These feel like different intellectual moves, and there is pedagogical value in naming and practising them separately.

But – and here is where I could not yield – the question is not whether the forms feel different but whether they are foundationally different. My claim was that all valid syllogistic reasoning reduces to a single principle: the recognition of inconsistency among propositions. The fifteen forms are not fifteen independent principles; they are fifteen manifestations of one principle operating under different initial conditions. To confuse the manifestations with the principle is to mistake the surface for the structure.

Let me offer an analogy from my colour vision work. One can describe the full spectrum of visible colours by naming them: crimson, vermilion, scarlet, rose, coral, salmon, and so forth. Each name captures a distinct perceptual experience, and there is value in preserving these distinctions – they allow us to communicate subtle chromatic differences. But the underlying reality is that all these colours are generated by three types of cone receptors responding to different wavelengths of light. The multiplicity of colour names does not mean there is a multiplicity of fundamental mechanisms. The diversity is real at the level of experience; the unity is real at the level of mechanism.

So it is with syllogistic forms. The diversity of forms is real at the level of pedagogical experience – students encounter different kinds of problems, different arrangements of premises, different conclusions to be drawn. But the unity is real at the level of logical structure. All valid reasoning in this domain reduces to the principle of non-contradiction. The antilogism simply makes that unity explicit.
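The single test can be sketched as follows: a syllogism is valid exactly when its two premises, taken together with the contradictory of its conclusion, cannot all be true at once. The sketch checks inconsistency by brute-force enumeration of which Venn regions are inhabited. That is an implementation choice made here, not Ladd-Franklin's own procedure, and it assumes the modern reading in which universal statements carry no existential import. The tuple encoding and function names are likewise illustrative.

```python
from itertools import product

# A Venn region is a triple of booleans: (in S, in M, in P).
REGIONS = list(product([False, True], repeat=3))
# A model says which of the eight regions are inhabited (256 models in all).
MODELS = [[r for r, on in zip(REGIONS, mask) if on]
          for mask in product([False, True], repeat=len(REGIONS))]
TERM = {"S": 0, "M": 1, "P": 2}
CONTRADICTORY = {"A": "O", "O": "A", "E": "I", "I": "E"}


def holds(prop, inhabited):
    """Truth of a categorical proposition (form, X, Y) in a model."""
    form, x, y = prop
    i, j = TERM[x], TERM[y]
    if form == "A":  # All X are Y
        return not any(r[i] and not r[j] for r in inhabited)
    if form == "E":  # No X are Y
        return not any(r[i] and r[j] for r in inhabited)
    if form == "I":  # Some X are Y
        return any(r[i] and r[j] for r in inhabited)
    if form == "O":  # Some X are not Y
        return any(r[i] and not r[j] for r in inhabited)
    raise ValueError(f"unknown form: {form}")


def is_inconsistent(triad):
    """True if no model makes all three propositions true together."""
    return not any(all(holds(p, model) for p in triad) for model in MODELS)


def is_valid(major, minor, conclusion):
    """Antilogism test: premises plus the denied conclusion must be inconsistent."""
    form, x, y = conclusion
    return is_inconsistent([major, minor, (CONTRADICTORY[form], x, y)])


# Term order of (major premise, minor premise) in each traditional figure.
FIGURES = {
    1: (("M", "P"), ("S", "M")),
    2: (("P", "M"), ("S", "M")),
    3: (("M", "P"), ("M", "S")),
    4: (("P", "M"), ("M", "S")),
}


def count_valid_forms():
    """Count valid forms among all 4 figures x 4^3 moods."""
    return sum(
        is_valid((a, *major), (b, *minor), (c, "S", "P"))
        for major, minor in FIGURES.values()
        for a, b, c in product("AEIO", repeat=3)
    )


barbara = is_valid(("A", "M", "P"), ("A", "S", "M"), ("A", "S", "P"))
baroco = is_valid(("A", "P", "M"), ("O", "S", "M"), ("O", "S", "P"))
```

Barbara and Baroco pass through exactly the same function. Under the stated reading, `count_valid_forms()` should come to the fifteen forms mentioned in the text.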

The second objection was more philosophical and cut deeper. Several of my colleagues – particularly those influenced by Hegel and the German idealists – argued that formal logic itself was an abstraction that obscured the dynamic, dialectical character of actual reasoning. They suggested that by reducing reasoning to the mechanical testing of inconsistency, I was draining logic of its content, turning it into an empty calculus that could say nothing about truth, only about formal validity. To them, the diversity of syllogistic forms reflected something about the unfolding of thought itself – different forms corresponded to different stages or movements in the dialectical process.

This objection I rejected entirely. It confuses logic with metaphysics. Logic is not concerned with how thought unfolds historically or dialectically. It is concerned with which inferences are valid – with which conclusions follow necessarily from which premises. The antilogism performs this task with maximum efficiency and transparency. If one wishes to theorise about the dialectical development of ideas, one is welcome to do so, but that is a separate enterprise. Logic provides the tools for testing whether particular inferences are sound. It does not and should not aspire to capture the “essence” of thought or the “nature” of reality.

Now, to your question about whether there are domains where multiple irreducible forms might be more truthful than a single unified principle. This is a genuine question, and I have thought about it considerably.

In modal logic – the logic of necessity and possibility – there may indeed be irreducible plurality. The rules governing “it is necessary that P” and “it is possible that P” do not reduce cleanly to the principle of non-contradiction alone. One requires additional axioms about the relationships between necessity, possibility, and actuality. These axioms are not self-evident in the way the principle of non-contradiction is. Different modal systems adopt different axioms, and there is genuine philosophical disagreement about which system correctly captures our intuitions about necessity.

Similarly, in ethical reasoning, I am not convinced that all valid moral inferences reduce to a single principle. Deontological reasoning – reasoning from duties and rules – operates differently from consequentialist reasoning – reasoning from outcomes and utilities. One might argue that these are not truly different logical forms but rather different premises fed into the same logical machinery. But I am not certain. It may be that ethical reasoning requires multiple, irreducible principles that cannot be unified without loss.

So yes, I grant that there may be domains where pluralism is warranted. But syllogistic logic is not one of them. The principle of non-contradiction is sufficient to ground all valid syllogistic inference. The antilogism demonstrates this. The appearance of diversity – the fifteen forms – is an artefact of how we arrange premises and conclusions, not a reflection of fundamental logical pluralism.

I would add one final thought. Elegance in logic is not merely aesthetic; it is epistemological. When we reduce complexity to simplicity, we gain understanding. The student who memorises fifteen forms has learned to perform fifteen tests. The student who grasps the antilogism has learned why those fifteen forms are valid. The latter student possesses knowledge; the former possesses technique. Both are valuable, but they are not equivalent.

That said, I do not wish to be dogmatic. If you can demonstrate a domain of valid reasoning that genuinely resists reduction to simpler principles – where the plurality is not merely apparent but real – I would be most interested to examine it. I have always believed that intellectual humility requires openness to counter-evidence. But in the case of syllogistic logic, I remain convinced: the unity is real, and the antilogism reveals it.

Tania Dimitriou, 29, Historian of Science and Mathematics, Athens

You worked across four distinct disciplines – mathematics, logic, psychology, and biology. But I’m intrigued by how each field had its own conventions for proof, evidence, and what counted as rigorous. When you moved from Boolean logic to experimental colour vision work, how did you translate your standards of rigour across those boundaries? Did you find yourself having to learn entirely new epistemologies, or did you discover underlying principles that connected how mathematicians and experimental psychologists validated knowledge?

Dr Dimitriou, you have asked perhaps the most difficult question anyone has posed to me – the question I have been avoiding for forty years. How does one translate standards of rigour across disciplinary boundaries when each discipline operates according to different epistemological conventions? The honest answer is: with great difficulty, and never perfectly.

When I began my work in mathematical logic at Johns Hopkins, I was operating within a tradition that valued formal demonstration. A valid proof was one that began with axioms and proceeded through logically necessary steps to a conclusion. There were no exceptions, no probabilities, no appeals to empirical observation. Either the proof was airtight or it was not. The standard was binary: valid or invalid. Charles Sanders Peirce worked within this framework, and it shaped my early thinking profoundly.

But when I moved into experimental psychology and colour vision research, I entered an entirely different world. Experimental psychology in the 1890s was asserting itself as a rigorous science, but the rigour looked nothing like mathematical rigour. One conducted experiments on human subjects – dozens or hundreds of them. One measured variations in their responses. One computed averages and attempted to identify patterns. One could never achieve the certainty of mathematical proof because human perception is variable, contingent, shaped by individual differences and contextual factors.

The shock of this transition was genuine. I remember sitting in Hermann von Helmholtz’s laboratory in Berlin in 1891, watching experimenters test subjects’ colour vision, and feeling almost disoriented. Where were the axioms? Where was the logical necessity? Instead, there were error measurements, statistical distributions, and careful caveats about the limitations of one’s conclusions. It felt less rigorous, more tentative. And yet – and this is crucial – it was not less rigorous in any absolute sense. It was rigorous in a different mode.

I came to understand that rigour is not a monolithic standard but rather a discipline-specific practice of justification. Mathematical rigour involves logical necessity and formal demonstration. Experimental rigour involves controlled observation, measurement precision, and reproducibility. Physiological rigour involves anatomical accuracy and mechanistic plausibility. Evolutionary rigour involves explanatory power and consistency with observed distributions in nature. None of these is “more rigorous” than the others; they are rigorous in relation to the kinds of questions each discipline asks.

The translation between disciplines, then, did not require abandoning my standards so much as understanding what standards were applicable to what questions. When I wished to argue that the antilogism solved a problem in formal logic, I used mathematical demonstration. When I wished to argue that colour vision evolved in three stages, I used comparative anatomy, evolutionary theory, and psychophysical data. The standards shifted because the questions shifted.

But here is where it became genuinely complicated: I had to learn to think in multiple registers simultaneously. When arguing for my colour vision synthesis, I could not rely on the precision of mathematical proof, but I also could not accept the looser standards that some experimental psychologists seemed willing to tolerate. I demanded that my colour-matching experiments be rigorous in their execution – careful controls, standardised stimuli, adequate sample sizes – but I also demanded that the theoretical interpretation of those experiments maintain logical coherence. I was not content to say, “The data show a trend toward X”; I required that X be theoretically intelligible and consistent with what we understood about anatomy and evolution.

This meant that I was constantly translating back and forth. I would take observations from the laboratory and ask: What logical structure must underlie these observations? I would take anatomical facts and ask: What evolutionary process could have produced this distribution? I would take theoretical principles from logic and ask: What experimental predictions follow from this principle?

The process was iterative and sometimes messy. I made mistakes – I over-interpreted data, I drew conclusions that were stronger than the evidence warranted, I occasionally allowed theoretical elegance to outweigh empirical caution. My evolutionary hierarchy of colour vision, which I described as moving from achromatic to blue-yellow to red-green, was partly inference, partly speculation. I was constructing a narrative that fit the available evidence, but I was not merely reporting that evidence.

I came to believe that this translation between epistemologies is not a liability but rather an intellectual asset. It forces one to question the assumptions buried within each discipline’s standards of rigour. Mathematicians often assume that formal proof is the gold standard without asking whether formal systems capture the phenomena they purport to describe. Experimentalists often assume that empirical observation speaks for itself without asking what theoretical interpretations are possible.

By working across these boundaries, I was forced to ask: What makes an argument in mathematics convincing? What makes an argument in experimental psychology convincing? Are these standards actually incompatible, or are they simply different? I became convinced that they are different – that they operate according to different criteria – but not incompatible. One can be rigorous in mathematical demonstration and rigorous in experimental design and rigorous in theoretical integration.

The price of this intellectual mobility was that I never belonged entirely to any single discipline. Mathematicians saw my logic work as interesting but secondary – I was also doing psychology, so perhaps not a “serious” mathematician. Psychologists saw my work as theoretically sophisticated but perhaps not sufficiently grounded in empirical detail – I was also working in logic and physiology, so perhaps not a “proper” experimentalist. Philosophers found my work technical but sometimes too focused on detail to engage with broader philosophical questions.

But I have come to regard this partial belonging as itself a form of rigour – the rigour of refusing to confine oneself within disciplinary boundaries when important questions span multiple fields. The cost was professional precariousness. The benefit was the possibility of a synthesis that could not have emerged from within any single discipline.

To your question about whether I discovered underlying principles connecting how mathematicians and experimental psychologists validate knowledge: yes, I did, though I would not overstate their universality. The deepest principle, I believe, is consistency. Mathematical proofs are valid because they maintain logical consistency – one cannot derive contradictions from sound axioms. Experimental findings are trustworthy because they are reproducible – they maintain consistency across repeated observations and different observers. Theoretical frameworks are compelling because they integrate observations without internal contradiction.

Consistency is not sufficient for truth – one can construct internally consistent but empirically false theories, or logically valid but trivial proofs. But consistency is necessary. In that sense, the principle I identified in logical validity – the principle of non-contradiction – operates across all these domains. Mathematicians, experimentalists, and theorists all reject claims that are internally inconsistent or that contradict established evidence. The form of the consistency varies, but the commitment to it remains constant.

This discovery did not come easily, and I am still reflecting upon it. But I believe it justified the intellectual wandering that defined my career. By refusing to remain confined within a single discipline, I could perceive connections that specialists within each field might miss. The price of that vision was institutional marginality. Whether it was worth the price, I will leave to others to judge.

Trevor Langston, 52, Logic Educator and Curriculum Designer, Toronto

If Johns Hopkins had granted you your PhD in 1882 and offered you a genuine faculty position, do you think you would have remained focused on logic, or might institutional security have freed you to explore the interdisciplinary synthesis – logic, psychology, neuroscience – that became your most significant contribution? In other words, was your exile from traditional academia actually generative? Would you have become a narrower scholar if you’d been welcomed into the establishment?

Mr Langston, you have posed what I might call the “counterfactual of consolation” – the question one asks oneself in difficult moments, wondering whether exclusion was a disguised blessing. I have asked it myself, and I have come to a complicated answer that I suspect will disappoint anyone seeking a neat moral.

The honest response is: I do not know. And I have lived long enough to understand that this uncertainty is itself important.

If Johns Hopkins had granted me my doctorate in 1882 and offered a genuine faculty position – say, as an Associate Professor of Logic or Mathematics – would I have remained narrow in my disciplinary focus? It is possible. A secure position in mathematics would have provided stable employment, a research budget, graduate students, and the institutional authority to shape a field. Under such circumstances, one typically specialises. One establishes oneself as the leading authority in a particular domain. I might have become the preeminent logician in America – the person everyone cited when discussing syllogistic validity or the algebra of logic.

That would have been a legitimate intellectual life. It would have been more secure financially, more prestigious professionally, more influential within academic circles. I might have trained a generation of logicians who carried forward my antilogism and ensured that my name remained attached to that contribution. The mathematics would have been rigorous, probably more systematic than what I actually produced whilst juggling unpaid teaching positions and financial precarity.

But – and here is the crucial uncertainty – I cannot claim with confidence that I would have undertaken the colour vision research at all. The colour vision work began precisely because I had time that a tenured professor does not typically possess. I was not bound to a department’s teaching schedule. I was not expected to publish at a specific rate or to train a specific number of doctoral students. I could pursue intellectual curiosity without immediate professional consequence.

When I decided to go to Germany in 1891 to study with Helmholtz and Müller, it was not a strategically calculated move within an academic career. It was an intellectual adventure motivated by genuine curiosity about a problem that fascinated me. Had I been a tenured professor at Johns Hopkins, would I have been able to absent myself for a year to study in foreign laboratories? Possibly. But there would have been professional pressures against it – the expectation to remain present, to maintain one’s teaching obligations, to attend departmental functions. Academic security often brings with it a loss of intellectual freedom, even as it provides resources.

Furthermore – and this is something I have observed in male colleagues who achieved the security I was denied – professional success often leads to a kind of intellectual conservatism. Once one has established oneself as an authority in a field, there is considerable pressure to defend that established position rather than to venture into novel territory. I had the luxury of obscurity, which meant I had the luxury of changing my mind, of pursuing new questions, of admitting uncertainty without professional cost.

I cannot claim that exclusion was “generative” in some abstract sense – that framing sanitises the genuine harm done to me. But I can say this: the particular intellectual path I followed, the breadth of my work, the integration of logic, psychology, and biology – this path might not have been possible within the institutional constraints that even successful academic women faced.

There is another consideration, which troubles me even now. Institutional security in the late nineteenth century meant conformity. Had I been accepted as a regular faculty member at Johns Hopkins, I would have been expected to behave in certain ways – to be deferential to senior colleagues, to avoid public controversy, to represent the institution’s values and respectability. The women who did achieve academic positions during this era often did so by accepting considerable constraints on their behaviour and their intellectual expression.

By contrast, my marginal position gave me a certain freedom to be combative, to challenge established ideas, to refuse easy accommodations. When William Johnson published work resembling my antilogism without proper attribution, I publicly shamed him. I did not calculate the professional consequences because I had limited professional standing to begin with. A tenured professor might have engaged in quiet correspondence; I wrote letters published in academic journals. My lack of institutional power paradoxically gave me a certain freedom to speak.

But I do not wish to romanticise this. The freedom purchased by exclusion is a poor substitute for the resources that institutional power provides. I could speak freely partly because I was not expected to speak at all – my voice was not taken seriously anyway. That is not genuine intellectual freedom; it is invisibility.

The question you ask assumes that there is a causal relationship between institutional exclusion and intellectual breadth – that the former produced the latter. I suspect the relationship is more complex. I am, by temperament, a boundary-crosser. I am intellectually restless, unable to confine myself within disciplinary categories. Perhaps institutional security would have constrained this restlessness; perhaps I would have resisted such constraint and found ways to pursue broad inquiry despite institutional pressure.

What I can say with confidence is this: the path I actually took – teaching unpaid classes, conducting research in multiple fields, publishing across disciplines, remaining perpetually on the margins – allowed me to ask certain questions that might otherwise have remained unasked. The integration of colour vision theories came from my ability to move fluidly between anatomical observation, psychophysical experiment, and evolutionary reasoning. That fluidity was easier to achieve when I was not anchored to a single department’s expectations.

If I had achieved institutional security early, I might have become a narrower, more specialised scholar – but I also might have become a more influential one. My ideas might have had greater impact if they had been systematically developed within a research programme with resources and students. The antilogism might have been more thoroughly elaborated and integrated into logic education if I had had a seminar where students learned the method. My colour vision synthesis might have been tested more rigorously if I had had access to funding and equipment.

So to your question about whether exclusion was “generative”: partially, perhaps, but at too great a cost. I was freed to be intellectually broad, but I was not given the resources to develop that breadth rigorously. I could ask important questions, but I lacked the institutional support to answer them definitively. The intellectual life I led was rich in some respects and impoverished in others.

I would not wish this path on any woman. The security and resources that male colleagues took for granted would have been immensely valuable. But neither do I wish to claim that I achieved nothing, or that my work was diminished by exclusion. Rather, I would say that I achieved different things from those I would have achieved within institutional security – neither better nor worse, but genuinely different.

The greatest tragedy is not that exclusion happened to produce breadth, but that it need not have happened at all. A woman could have been granted security and intellectual freedom, resources and the autonomy to pursue varied questions. The fact that these goods were presented as mutually exclusive – that security required conformity, that freedom came only through marginality – reveals the deeper injustice of the system in which I worked.

That is what I would want you to understand. Not that my suffering was justified or redemptive, but that it was unnecessary. The same intellectual work could have been done, probably more effectively, had I been granted the same opportunities extended to male scholars without question.

Samira El-Hassan, 38, Gender Studies Scholar and Science Policy Advocate, Cairo

Your marriage to Fabian Franklin became a career barrier through anti-nepotism rules, yet you describe him as a genuine intellectual partner. I’m wondering whether, in retrospect, you see any way the partnership could have been structured differently – institutionally or personally – that might have allowed both of you to flourish. Or do you think the problem was simply intractable within the constraints of the era? What advice would you give to contemporary couples in academia navigating similar tensions?

Dr El-Hassan, you ask the question that has haunted me more than any other – not about my work, but about my life. Could the partnership with Fabian have been structured differently? Could we have found some arrangement that allowed both of us to flourish professionally? Or was the problem simply intractable within the constraints of our era?

I have turned this question over in my mind for decades, and I still do not have a satisfying answer. But let me be candid about what the possibilities were, and what the costs would have been.